The Data Center Professionals Network

The Global Database of Data Center Industry Expertise

Reclaim Lost Capacity In Your Data Center – It Starts With An Approach That Combines The Best of Engineering and IT


A funny thing happened to data centers during the past 30 years: they evolved more quickly than the practices now used to manage them. That, says Sherman Ikemoto, Future Facilities general manager, North America, is because the data center management processes presently in use evolved from general building management practices, when they should have evolved from electronics cooling engineering practices. As a result, the industry now faces management challenges it wasn’t prepared for; challenges “probably best described as protecting the facility’s ability to safely house IT equipment to protect the compute capacity of the room,” Ikemoto says.

Although enterprises know they need to fully utilize the expensive facilities they’ve built, current techniques for addressing capacity issues lack a desperately needed engineering component, Ikemoto says.

Attend an Upcoming Event to learn more: http://bit.ly/FFmediacenter

The Fundamental Problem:

Current practices often lead to losses of more than 30% of the original Design Compute Capacity in modern mission-critical facilities. In fact, it is safe to say that owner/operators will lose a minimum of 30% of capex from day one. Why hasn’t anyone highlighted such a shocking state of affairs?

Because these losses are due to fragmentation of space, power, and cooling over time and do not become obvious until well into the operational life of the facility. Fragmentation occurs because a typical owner/operator will almost certainly break the original IT load layout planned during the design of the facility. One example is that a facility manager will often assume a 3kW load of blade technology in a cabinet will have the same engineering impact as 3kW of standard server hardware. But while the power draw in both cases is the same, the airflow requirements will be substantially different, leading to cooling imbalances and fragmentation.
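The airflow difference follows from the sensible-heat relation for air, P = ρ · c_p · Q · ΔT, so the airflow needed is Q = P / (ρ · c_p · ΔT). Two cabinets drawing the same power need different airflow whenever their inlet-to-exhaust temperature rise differs. The sketch below assumes standard air properties; the 10 K and 20 K temperature rises are illustrative assumptions, not measured values for blade or standard server hardware.

```python
# Sensible-heat airflow estimate: P = rho * cp * Q * dT  =>  Q = P / (rho * cp * dT)
AIR_DENSITY = 1.2        # kg/m^3, approximate for air near sea level at about 20 C
AIR_CP = 1005.0          # J/(kg*K), specific heat of air at constant pressure
M3S_TO_CFM = 2118.88     # 1 m^3/s is about 2118.88 cubic feet per minute


def required_airflow_m3s(power_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to remove power_w with an air temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return power_w / (AIR_DENSITY * AIR_CP * delta_t_k)


# Hypothetical cabinets: identical 3 kW draw, different (assumed) temperature rise.
cabinets = {
    "cabinet A (assumed 10 K rise)": (3000.0, 10.0),
    "cabinet B (assumed 20 K rise)": (3000.0, 20.0),
}

for name, (power_w, delta_t_k) in cabinets.items():
    q = required_airflow_m3s(power_w, delta_t_k)
    print(f"{name}: {q:.3f} m^3/s ({q * M3S_TO_CFM:.0f} CFM)")
```

With these assumptions, cabinet A needs roughly twice the airflow of cabinet B for the same 3 kW, which is the kind of mismatch a power-only view of the room cannot reveal.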

Owner/operators are intuitively aware of these losses and have been asking for solutions. It is common to see large swaths of space left unused simply because the facilities manager is afraid of creating hot spots where cooling is insufficient. Many believe the main reason for such losses is that owner/operators are not effectively monitoring their assets, power, and environment. Because of this, the current market panacea is the use of modern DCIM tools.

However, while accurate monitoring is good and an essential part of managing the facility, it will not help with the losses due to fragmentation. Companies take this route nonetheless, Ikemoto says, because “there isn’t enough engineering expertise in the industry to champion a more effective approach.”

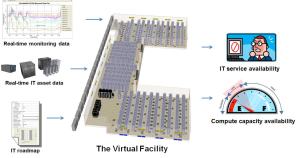

Future Facilities’ approach differs from other DCIM solutions by integrating simulation capabilities, using a methodology called Predictive DCIM, also described as the Virtual Facility.

“Predictive DCIM is based on simulation techniques that view the engineering impact of any future IT load configuration prior to implementation, thus helping in reducing compute capacity losses and IT equipment resilience issues,” Ikemoto says. If the model predicts that capacity will be destroyed, an engineering fix can be incorporated to avoid the damage that would have been done otherwise.
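The predict-then-implement workflow can be illustrated with a deliberately simplified model. In the sketch below, each zone has a fixed cooling budget that stands in for a simulation result; this is an assumption for illustration only, since real airflow does not split into independent zone budgets, which is why tools like the one described here rely on CFD. The `Zone` class and `predict`/`plan_change` functions are hypothetical names, not part of any Future Facilities product.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    """Hypothetical cooling zone with a fixed cooling budget (a stand-in for a CFD result)."""
    name: str
    cooling_kw: float   # cooling the zone can absorb, assumed known from a model
    load_kw: float      # IT load currently placed in the zone


def predict(zones, moves):
    """Predict per-zone load after proposed moves without touching the live layout."""
    predicted = {name: zone.load_kw for name, zone in zones.items()}
    for zone_name, delta_kw in moves:
        predicted[zone_name] += delta_kw
    return predicted


def plan_change(zones, moves):
    """Apply moves only if no zone is predicted to exceed its cooling budget.

    Returns the zones that would be overloaded (an empty list means the change was applied).
    """
    predicted = predict(zones, moves)
    problems = [name for name, load in predicted.items() if load > zones[name].cooling_kw]
    if not problems:
        for name, load in predicted.items():
            zones[name].load_kw = load
    return problems


zones = {"A": Zone("A", cooling_kw=40.0, load_kw=30.0),
         "B": Zone("B", cooling_kw=40.0, load_kw=10.0)}

print(plan_change(zones, [("A", 15.0)]))  # A would reach 45 kW > 40 kW, so it is rejected
print(plan_change(zones, [("B", 15.0)]))  # B reaches 25 kW, so it is applied
```

The key design choice is that the prediction runs on a copy of the layout, so a change that would destroy capacity is caught and rerouted (here, to zone B) before anything moves on the floor.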

“Capacity is not a stationary thing. It changes with each change to the IT configuration,” Ikemoto says. Ultimately, “you want to exhaust all your resources at the same time,” something that’s tricky “unless you can see how [space, power, and cooling] relate to each other and to the IT configuration.” Ikemoto likens the situation to a fragmented hard drive where the only way to reclaim space is via defragmentation. Beyond space, though, data center managers must also manage power and cooling together because “you may do a very good job of defragmenting your data center space, but all that defragmented space could be in a spot where there’s no cooling,” Ikemoto says. “And if that’s the case, you don’t have capacity.”
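Ikemoto’s point that defragmented space is worthless without cooling can be expressed as a minimum: a zone can host only as much IT load as its scarcest resource allows, and anything above that is stranded. The sketch below uses hypothetical numbers and assumes free space has already been converted to kW using a planned per-rack density; it is an illustration of the idea, not how any particular DCIM tool computes capacity.

```python
# Free resources per zone, each expressed as kW of IT load it could support.
# Numbers are hypothetical; converting free space to kW assumes a planned per-rack density.
zones = {
    "row 1": {"space": 0.0, "power": 20.0, "cooling": 25.0},
    "row 2": {"space": 30.0, "power": 25.0, "cooling": 5.0},
    "row 3": {"space": 10.0, "power": 10.0, "cooling": 10.0},
}


def usable_kw(free):
    """A zone can host only as much load as its scarcest resource allows."""
    return min(free.values())


def stranded_kw(free):
    """Any amount of a resource above the scarcest one cannot be used in this zone."""
    limit = usable_kw(free)
    return {resource: amount - limit for resource, amount in free.items()}


for resource in ("space", "power", "cooling"):
    total = sum(z[resource] for z in zones.values())
    print(f"total free {resource}: {total:.0f} kW")
print(f"usable capacity: {sum(usable_kw(z) for z in zones.values()):.0f} kW")
for name, free in zones.items():
    print(name, stranded_kw(free))
```

Although 40 kW of space and 40 kW of cooling are free across the floor, only 15 kW is usable because they sit in different rows. Row 3 is the balanced case, where space, power, and cooling would all be exhausted at the same time.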

Enter 6SigmaFM:

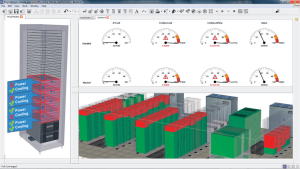

6SigmaFM from Future Facilities gives users a way to predict and visually show how data center changes will influence space, power, cooling, and ultimately capacity. “It’s like being able to walk into your real data center and see space, power, and cooling availability and then identify why they have gone out of alignment and how to realign them so that capacity is always available where you need it,” Ikemoto says.

Future Facilities uses CFD (computational fluid dynamics) analysis to simulate airflow and cooling. This is integrated with space and power simulation to reveal how cooling, the invisible aspect of capacity, is redistributed by the IT configuration relative to space and power. That redistribution can destroy capacity across entire rows on the data center floor, as well as capacity associated with individual U-slots inside cabinets. 6SigmaFM and its related tools work for new data center builds as well as for ones that have been in operation for years, Ikemoto says, helping those data centers “establish a new roadmap where it not only upgrades and changes IT equipment as usual but integrates solutions to reclaim capacity as it goes.”
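Capacity loss at the U-slot level can be pictured as a filter over predicted inlet temperatures: a slot whose inlet air would exceed an acceptable limit cannot safely take equipment, even if the cabinet has space and power. The sketch below assumes the per-slot temperatures come from a simulation; the temperature profile is hypothetical, and the 27 °C limit is the upper end of the ASHRAE recommended inlet range for IT equipment.

```python
# Upper end of the ASHRAE recommended inlet temperature range for IT equipment.
ASHRAE_RECOMMENDED_MAX_C = 27.0


def usable_slots(inlet_temps_c, limit_c=ASHRAE_RECOMMENDED_MAX_C):
    """Return the U positions whose predicted inlet temperature stays within the limit."""
    return sorted(u for u, temp in inlet_temps_c.items() if temp <= limit_c)


# Hypothetical predicted inlet temperatures for a 42U cabinet, warming toward the top.
predicted = {u: 20.0 + 0.25 * u for u in range(1, 43)}

ok = usable_slots(predicted)
lost = [u for u in predicted if u not in ok]
print(f"usable U-slots: {len(ok)}, lost U-slots: {len(lost)} (U{lost[0]} to U{lost[-1]})")
```

Under this assumed profile, the upper third of the cabinet is lost to cooling rather than to space or power, which is the kind of loss a simulation makes visible before equipment is installed.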



© 2026 Created by DCPNet Admin.
